Temperature setting in OpenAI's API

OpenAI API for creators and entrepreneurs.

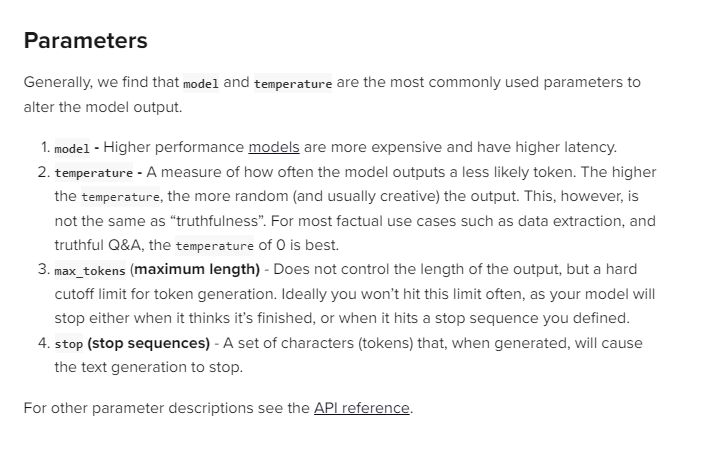

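OpenAI's large language models (LLMs) expose a parameter called 'temperature'. The API accepts values from 0 to 2, although most practical use stays between 0 and 1. It controls how much randomness the model applies when choosing each next word, and therefore how much creative freedom it has in generating responses. Think of it like a thermostat for the AI's creativity!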

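In practical terms, a temperature of 0 makes the model pick the most likely next word almost every time, so it sticks to common words and phrases and gives nearly the same answer on repeated runs. It's like a news reporter, sticking to the facts and well-known terms. The focus is on clarity and familiarity.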

Conversely, a temperature of 1 lets the model flex its creative muscles, giving less likely words a real chance of being chosen. It's like a poet, exploring the language in novel ways. The focus is on creativity and originality.
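
To make the reporter-versus-poet contrast concrete, here is a minimal sketch of temperature scaling, the standard technique of dividing a model's raw word scores by the temperature before turning them into probabilities. It is an illustration only, not OpenAI's actual implementation: the word list, the scores, and the function names `softmax_with_temperature` and `sample_token` are all made up for this example, and treating a temperature of 0 as "always pick the top word" is an assumption.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Assumption: treat 0 as greedy decoding, all probability on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Pick one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-word candidates and scores, invented for illustration.
tokens = ["the", "a", "luminous", "zephyr"]
logits = [4.0, 3.0, 1.0, 0.5]

for t in (0.0, 0.2, 0.7, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperatures nearly all of the probability sits on the most common word, while at 1 the rarer words ("luminous", "zephyr") keep a noticeable share, which is why higher settings read as more creative and less predictable.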

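However, it's not all smooth sailing. Due to the architecture of these models and their training datasets, the model can sometimes generate responses that sound confident but are factually wrong or made up. This is known as 'hallucination'. It can happen at any temperature, though higher settings make off-track output more likely.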

ChatGPT can outright lie, with great confidence. It's called 'hallucinating'. Here it's claims to run data mining ops to generate #stock recommendation in a few seconds. I however do not doubt that #ChatGPT can perform such tasks, if developed further (e.g. Plugins, #AutoGPT ) https://t.co/rbz8jAuLAf

— Ishtiaq Rahman (@buildanything) May 26, 2023

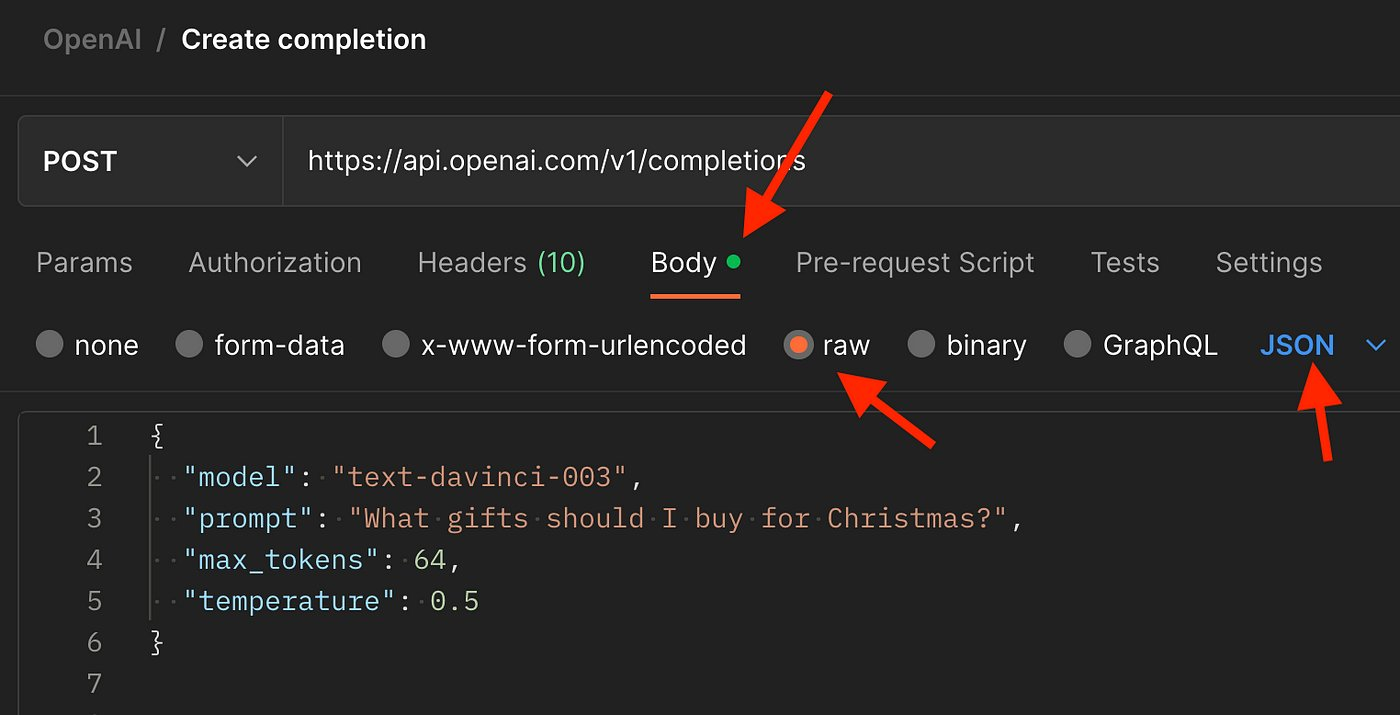

The most useful temperature range for OpenAI's LLMs is between 0 and 1, even though the API itself accepts values up to 2. Staying in this range balances creativity with coherence, allowing the model to generate responses people find acceptable. You can't set the temperature in ChatGPT, but you can when you're calling the API.
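
As a rough sketch of what setting the temperature through the API looks like, the snippet below builds the JSON body for a Chat Completions request without sending it. The helper name `build_chat_request`, the prompt, and the default model are assumptions for illustration; the `model`, `messages`, and `temperature` fields and the endpoint URL come from OpenAI's API reference, which lists 0 to 2 as the accepted range even though this article concentrates on 0 to 1.

```python
import json

# Chat Completions endpoint from OpenAI's API reference.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, temperature=0.7, model="gpt-3.5-turbo"):
    """Build the request body for one user message (hypothetical helper)."""
    # The API reference documents 0 to 2 as the accepted range.
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


# A low temperature for a task where consistency matters more than flair.
body = json.dumps(build_chat_request("Summarize this paragraph.", temperature=0.2))
print(body)
```

To actually send it, you would POST this body to `API_URL` with a `Content-Type: application/json` header and an `Authorization: Bearer <your API key>` header.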

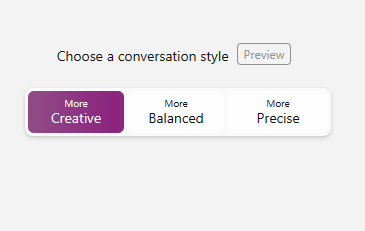

As an example, ChatGPT (GPT-3.5) reportedly operates at a fixed temperature of 0.7. This leads to a good mix of creativity and reliability, although it can sometimes result in hallucinations. Bing Chat offers three conversation styles (Creative, Balanced and Precise), which behave much like three temperature presets.

A temperature value above 0.9 tends to lead to responses that are too unpredictable and drift too far from the prompt. These responses can be interesting, but they're often not useful for practical purposes.

So, while a high-temperature setting can be fun for creative or artistic applications, it's not typically recommended for tasks that require reliable, understandable responses. It's all about finding the right balance.

Reference:

1. OpenAI API documentation

Written using GPT4 w/ Browsing

✅Edited and fact-checked by humans.